Microsoft Unleashes Maia 200: A Game-Changer for AI Inference

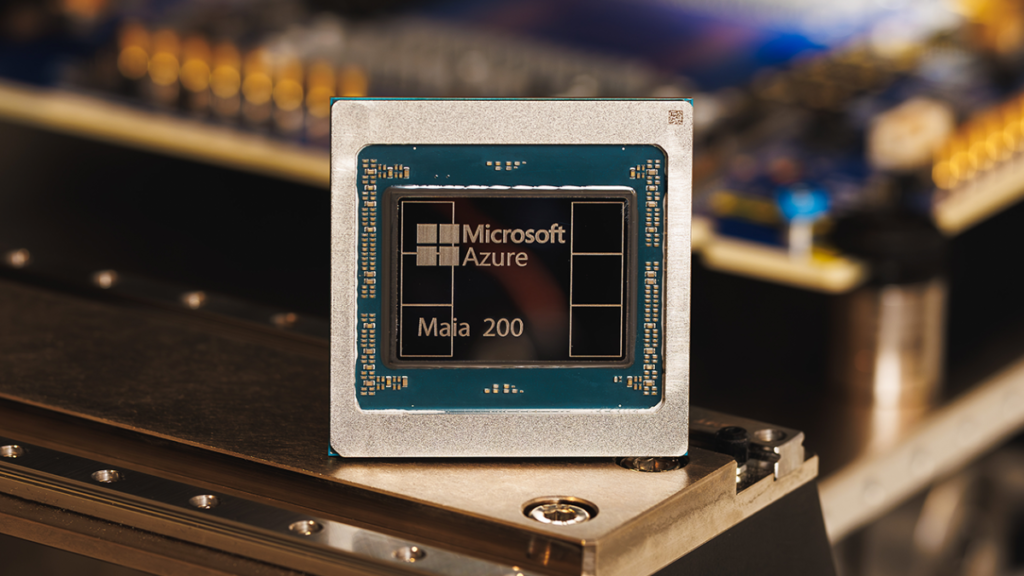

Microsoft has unveiled its latest custom-designed silicon, the Maia 200 AI accelerator. Described by the company as a “silicon workhorse,” the chip is engineered to scale AI inference, with the goal of running advanced AI models faster and more efficiently.

The Next Evolution in AI Processing

Building on its predecessor, the Maia 100, which debuted in 2023, the Maia 200 is designed to handle the most demanding AI workloads with faster processing and greater operational efficiency. It packs over 100 billion transistors and delivers over 10 petaflops at 4-bit precision and approximately 5 petaflops at 8-bit precision, a substantial step up from the previous generation.
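As a rough illustration of what these peak figures imply, the sketch below converts them into a best-case runtime. The workload size and the helper name `ideal_seconds` are hypothetical, and the quoted figures are rounded ("over 10" and "approximately 5"), so the results are lower bounds under stated assumptions rather than benchmarks.

```python
PETA = 10**15  # one petaflop = 10^15 floating-point operations per second

# Peak throughput as quoted in the article, rounded to the stated values.
MAIA_200_PEAK_PETAFLOPS = {"4-bit": 10.0, "8-bit": 5.0}


def ideal_seconds(total_ops: float, precision: str) -> float:
    """Best-case runtime if the chip sustained its quoted peak rate."""
    return total_ops / (MAIA_200_PEAK_PETAFLOPS[precision] * PETA)


# Hypothetical workload size, chosen only for illustration.
workload_ops = 2e15

fp4_time = ideal_seconds(workload_ops, "4-bit")
fp8_time = ideal_seconds(workload_ops, "8-bit")

print(f"4-bit best case: {fp4_time:.2f} s")
print(f"8-bit best case: {fp8_time:.2f} s")
print(f"8-bit / 4-bit time ratio: {fp8_time / fp4_time:.1f}x")
```

Under these assumptions, the same workload takes about twice as long at 8-bit precision as at 4-bit precision. Real workloads rarely sustain peak throughput, so actual runtimes would be longer.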

Understanding AI Inference: The Engine of Modern AI

AI inference is central to the Maia 200’s significance. Much attention goes to the “training” phase of AI models, in which vast datasets are processed to teach an AI system. Inference is the subsequent process of actually *running* that trained model to make predictions or generate outputs. As AI technologies mature and are integrated into more real-world applications, inference costs have become a critical part of AI companies’ overall operating expenses. This shift has prompted an industry-wide effort to make inference more cost-effective and energy-efficient.
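The distinction between training and inference can be sketched with a toy model. This is a minimal illustration in plain Python, not how production AI systems are built: the one-weight model, the data, and the function names `train` and `infer` are all assumptions made for the example.

```python
def train(samples, epochs=200, lr=0.01):
    """Training: repeatedly adjust the weight to reduce error on known data."""
    w = 0.0
    for _ in range(epochs):
        for x, y in samples:
            error = w * x - y      # how far the current prediction is off
            w -= lr * error * x    # gradient step for squared error
    return w


def infer(w, x):
    """Inference: apply the already-trained weight to a new input."""
    return w * x


# Toy dataset following y = 2x (illustrative only).
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

w = train(data)                # done once, many passes over the data
prediction = infer(w, 10.0)    # done for every request the model serves

print(f"learned weight: {w:.4f}")
print(f"prediction for x=10: {prediction:.4f}")
```

Training here loops over the data hundreds of times but happens once. Inference is a single cheap step, but it runs for every request a deployed model serves, which is why its cumulative cost grows with usage.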

Maia 200: Microsoft’s Answer to AI Optimization

Microsoft intends the Maia 200 to be a cornerstone of this optimization drive. By streamlining AI business operations, minimizing disruptions, and curbing power consumption, the chip aims to make deploying complex AI models more practical and sustainable. The company states that “one Maia 200 node can effortlessly run today’s largest models, with plenty of headroom for even bigger models in the future.”

The Great Chip Race: Tech Giants Forge Their Own Silicon Paths

The unveiling of the Maia 200 also reflects a broader trend in the tech industry: major players are increasingly designing their own custom silicon. The shift is driven largely by a desire to reduce reliance on third-party GPU manufacturers, particularly NVIDIA, whose graphics processing units have become indispensable for AI development. Proprietary chips let these companies tailor hardware to their own software ecosystems and AI workloads, potentially gaining efficiency, lower costs, and greater control.

Beyond NVIDIA: A Landscape of Innovation

Microsoft is not alone in this endeavor. Google, for instance, has long invested in its Tensor Processing Units (TPUs), specialized AI accelerators that it offers as cloud compute power rather than as standalone chips. Amazon has its own Trainium AI accelerator line and launched the latest version, Trainium3, in December. By taking on compute-intensive tasks that would otherwise fall to NVIDIA GPUs, these custom chips give their makers a way to reduce hardware costs and optimize performance within their own cloud infrastructure.

Maia 200’s Competitive Edge

Microsoft is positioning the Maia 200 as a frontrunner in this competition. In its press release, the company said the chip delivers three times the FP4 performance of Amazon’s third-generation Trainium chips and FP8 performance above that of Google’s seventh-generation TPU. These comparisons are Microsoft’s own, and they signal the company’s ambition to lead in AI hardware.

Real-World Impact and Future Horizons

The Maia 200 isn’t just a promise for the future; it’s already hard at work within Microsoft’s vast AI ecosystem. The chip is actively powering the sophisticated AI models developed by the company’s Superintelligence team and is instrumental in supporting the operations of Copilot, Microsoft’s intelligent chatbot.

Looking ahead, Microsoft wants to broaden the Maia 200’s reach. The company has invited developers, academics, and frontier AI labs to use its Maia 200 software development kit, a move intended to encourage adoption of the chip across a wider range of AI workloads.