Beyond the Scan: Unpacking DHS’s Controversial Mobile Fortify Facial Recognition App

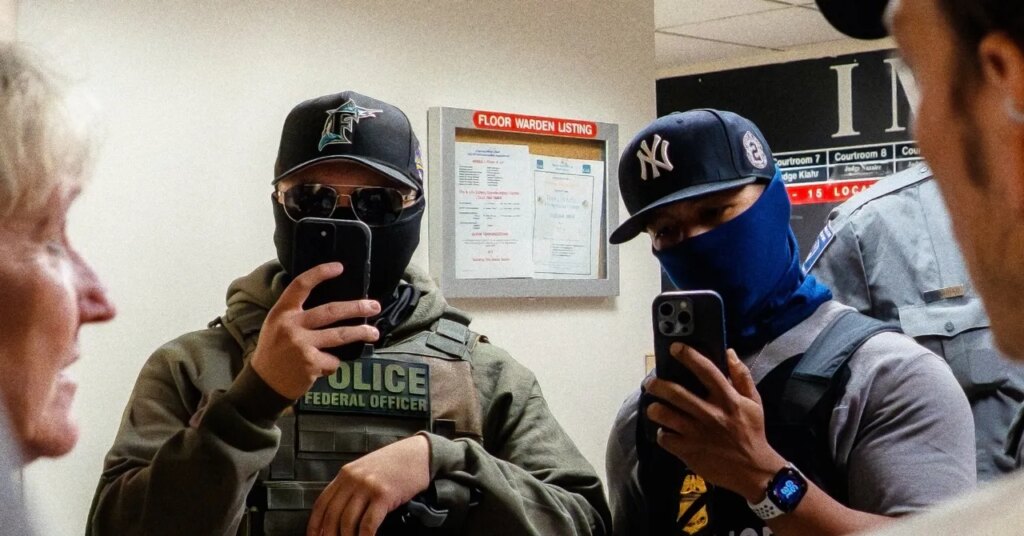

The Department of Homeland Security (DHS) recently pulled back the curtain on “Mobile Fortify,” a powerful facial recognition application that federal immigration agents use to identify individuals in the field. The disclosure, which details the app’s capabilities and names its previously undisclosed vendor, emerged as part of DHS’s mandated 2025 AI Use Case Inventory, a periodic public disclosure of artificial intelligence tools employed by federal agencies. The new insights have ignited a fresh wave of scrutiny over the expansive reach of biometric surveillance, which affects undocumented immigrants and U.S. citizens alike.

Unveiling Mobile Fortify: A Closer Look at Federal Surveillance

The DHS inventory contains two distinct entries for Mobile Fortify, reflecting its deployment across both Customs and Border Protection (CBP) and Immigration and Customs Enforcement (ICE). Both agencies categorize the app as being in the “deployment” phase, indicating its active use in operations. CBP confirmed that Mobile Fortify became fully operational in early May of the previous year, and ICE reports gaining access on May 20, 2025. ICE’s access date predates 404 Media’s initial public reports of the app’s existence, raising questions about transparency.

The Tech at Play: Behind the Scenes with NEC’s AI

A significant revelation from the inventory was the identification of NEC as the app’s primary vendor, a detail previously kept from public knowledge. NEC prominently features a facial recognition solution called “Reveal” on its website, boasting capabilities for both one-to-many searches against vast databases and precise one-to-one matches. While CBP attributes the app’s development solely to NEC, ICE notes a partial in-house contribution. Further reinforcing NEC’s pivotal role, a substantial $23.9 million contract between the company and DHS, spanning 2020 to 2023, outlined the use of NEC’s biometric matching products across “unlimited facial quantities, on unlimited hardware platforms, and at unlimited locations.” NEC has yet to respond to inquiries regarding these revelations.

At its core, Mobile Fortify serves as a critical tool for rapidly confirming identities, particularly in challenging field conditions where agents often operate with limited information and disparate data systems. The app is equipped to capture facial biometrics, “contactless” fingerprints, and images of identity documents. This sensitive data is then transmitted to CBP for integration into government biometric matching systems. These sophisticated systems employ artificial intelligence algorithms to cross-reference faces and fingerprints against existing records, subsequently returning potential matches alongside biographic information. Additionally, ICE utilizes the app to extract text from identity documents, enabling “additional checks.” It’s important to note that ICE clarifies it does not directly own or interact with the underlying AI models; these remain under CBP’s purview.
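To make the distinction between one-to-one and one-to-many matching concrete, here is a minimal Python sketch. It is purely illustrative and does not reflect how NEC’s products or CBP’s matching systems actually work, which the inventory does not describe. The embedding vectors, the record names, the `verify` and `identify` helpers, and the 0.8 threshold are all hypothetical assumptions chosen for the example.

```python
import math

def cosine_similarity(a, b):
    """Similarity between two face embeddings (hypothetical vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def verify(probe, reference, threshold=0.8):
    """One-to-one: compare a probe against a single claimed identity."""
    return cosine_similarity(probe, reference) >= threshold

def identify(probe, gallery, threshold=0.8, top_k=3):
    """One-to-many: search a whole gallery and return ranked candidates."""
    scored = [(name, cosine_similarity(probe, emb)) for name, emb in gallery.items()]
    candidates = [(name, score) for name, score in scored if score >= threshold]
    candidates.sort(key=lambda pair: pair[1], reverse=True)
    return candidates[:top_k]

# Toy gallery: three made-up records with three-dimensional embeddings.
# Real systems use far larger vectors and galleries; the threshold is assumed.
gallery = {
    "record_a": [0.9, 0.1, 0.0],
    "record_b": [0.1, 0.9, 0.1],
    "record_c": [0.7, 0.4, 0.1],
}
probe = [0.85, 0.2, 0.05]

if __name__ == "__main__":
    print("1:1 against record_b:", verify(probe, gallery["record_b"]))
    for name, score in identify(probe, gallery):
        print(f"1:N candidate {name}: {score:.3f}")
```

In this toy data, the one-to-many search returns two different records above the threshold. That is the general reason such searches yield “potential matches” rather than a single confirmed identity, and why the choice of threshold and the handling of near-ties matter so much for misidentification.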

Data Sources and Disturbing Echoes

The source data for Mobile Fortify’s AI training and evaluation also raises eyebrows. CBP indicated that “Vetting/Border Crossing Information/Trusted Traveler Information” was used to train, fine-tune, or evaluate the app. However, the agency did not specify which of the three applied and did not respond to requests for clarification.

The mention of “Trusted Traveler Information” is particularly concerning. CBP’s Trusted Traveler Programs encompass widely used services like TSA PreCheck and Global Entry. Recent declarations have brought to light potential repercussions. A Minnesota woman, for instance, reported the revocation of her Global Entry and TSA PreCheck privileges after an interaction with a federal agent who openly discussed using “facial recognition.” In a separate legal filing by the state of Minnesota, an individual detained by federal agents recounted an officer’s ominous warning: “Whoever is the registered owner [of this vehicle] is going to have a fun time trying to travel after this.” These incidents suggest a potentially far-reaching impact of such biometric tools on individuals’ ability to travel and their basic civil liberties.

Troubling Timelines and Procedural Gaps

A glaring discrepancy has also emerged regarding mandated oversight protocols. While CBP asserts the existence of “sufficient monitoring protocols” for the app, ICE acknowledges that the development of such protocols is still “in progress.” Furthermore, ICE states it will identify potential impacts during a future AI impact assessment. This timeline directly contradicts guidance from the Office of Management and Budget (OMB), which stipulates that agencies must complete an AI impact assessment *before* deploying any high-impact use case. Both CBP and ICE themselves classify Mobile Fortify as “high-impact” and concede it is already “deployed.” The implications of deploying such a powerful, high-impact tool without fully established monitoring or prior impact assessments are significant.

The Human Cost: Errors, Oversight, and Absent Safeguards

The consequences of an erroneous match by facial recognition technology can be devastating. 404 Media previously highlighted a case where a woman was unjustly detained after being misidentified twice by the Mobile Fortify app, underscoring the real-world dangers of algorithmic error.

In a worrying echo of the procedural gaps, ICE admits that the development of an appeals process for individuals affected by the app is still “in-progress.” Similarly, the agency’s efforts to “consult and incorporate feedback from end users of this AI use case and the public” are also listed as incomplete. The lack of a clear, established mechanism for challenging misidentifications or providing public input on a system with such profound implications is a critical failing.

A Call for Accountability and Transparency

Notably, despite repeated inquiries, DHS, CBP, and ICE have remained silent, offering no responses to requests for comment on these pressing issues. This collective silence further fuels concerns about accountability and transparency surrounding the deployment and impact of Mobile Fortify.

The ongoing revelations about Mobile Fortify paint a picture of rapidly deployed, powerful surveillance technology with potentially inadequate safeguards and a troubling lack of transparency. As federal agencies increasingly integrate AI into their operations, the need for robust oversight, public discourse, and unwavering accountability becomes paramount to protect civil liberties in the digital age.