
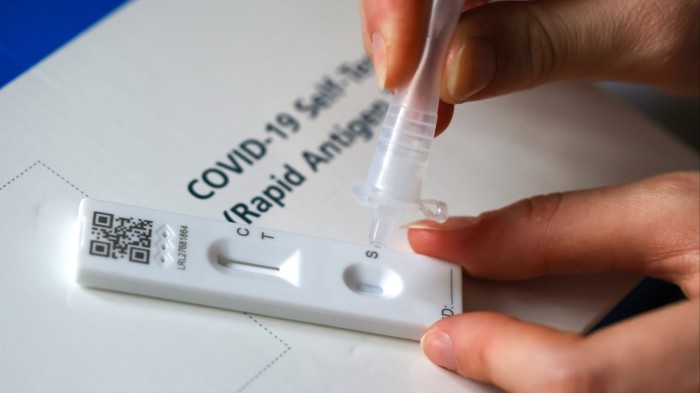

One of my relatives heard some strange stories when working on a healthcare helpline during the Covid pandemic. Her job was to help callers complete the rapid lateral flow tests used millions of times during lockdown. But some callers were clearly confused by the procedure. “So, I’ve drunk the fluid in the tube. What do I do now?” asked one.

That user confusion may be an extreme example of a common technological problem: how ordinary people use a product or service in the real world may diverge wildly from the designers’ intentions in the lab.

Sometimes that misuse can be deliberate, for better or worse. For example, the campaigning organisation Reporters Without Borders has tried to protect free speech in several authoritarian countries by hiding banned content on the Minecraft video game server. Criminals, meanwhile, have been using home 3D printers to manufacture untraceable weapons. More often, though, misuse is unintentional, as with the Covid tests. Call it the inadvertent misuse problem, or “imp” for short. The new gremlins in the machines might well be the imps in the chatbots.

Take the general purpose chatbots, such as ChatGPT, which are being used by 17 per cent of Americans at least once a month to self-diagnose health concerns. These chatbots have amazing technological capabilities that would have seemed like magic a few years ago. In terms of clinical knowledge, triage, text summarisation and responses to patient questions, the best models can now match human doctors, according to various tests. Two years ago, for example, a mother in Britain successfully used ChatGPT to identify tethered cord syndrome (related to spina bifida) in her son that had been missed by 17 doctors.

That raises the prospect that these chatbots could one day become the new “front door” to healthcare delivery, improving access at lower cost. This week, Wes Streeting, the UK’s health minister, promised to upgrade the NHS app using artificial intelligence to provide a “doctor in your pocket to guide you through your care”. But the ways in which chatbots can best be used are not the same as how they are most commonly used. A recent study led by the Oxford Internet Institute has highlighted some troubling flaws, with users struggling to use them effectively.

The researchers enrolled 1,298 participants in a randomised, controlled trial to test how well they could use chatbots to respond to 10 medical scenarios, including acute headaches, broken bones and pneumonia. The participants were asked to identify the health condition and find a recommended course of action. Three chatbots were used: OpenAI’s GPT-4o, Meta’s Llama 3 and Cohere’s Command R+, which all have slightly different characteristics.

When the test scenarios were entered directly into the AI models, the chatbots correctly identified the conditions in 94.9 per cent of cases. However, the participants did far worse: they provided incomplete information and the chatbots often misinterpreted their prompts, resulting in the success rate dropping to just 34.5 per cent. The technological capabilities of these models did not change but the human inputs did, leading to very different outputs. What’s worse, the test participants were also outperformed by a control group, who had no access to chatbots but consulted regular search engines instead.

The results of such studies do not mean we should stop using chatbots for health advice. But they do suggest that designers should pay far more attention to how ordinary people might use their services. “Engineers tend to think that people use the technology wrongly. Any user malfunction is therefore the user’s fault. But thinking about a user’s technological expertise is fundamental to design,” one AI company founder tells me. That is particularly true with users seeking medical advice, many of whom may be desperate, sick or elderly people showing signs of mental deterioration.

More specialist healthcare chatbots may help. However, a recent Stanford University study found that some widely used therapy chatbots, helping address mental health challenges, can also “introduce biases and failures that could result in dangerous consequences”. Researchers suggest that more guardrails should be included to refine user prompts, proactively request information to guide the interaction and communicate more clearly.

Tech companies and healthcare providers should also do far more user testing in real-world conditions to ensure their models are used appropriately. Developing powerful technologies is one thing; learning how to deploy them effectively is quite another. Beware the imps.