## When the Cyber Guardian Becomes the Breach: CISA Official’s ChatGPT Files Raise Alarms

In a development that has sent ripples through the digital security landscape, the acting head of the U.S. Cybersecurity and Infrastructure Security Agency (CISA) reportedly uploaded sensitive government contracting documents, explicitly marked “for official use only,” to the public version of ChatGPT. This incident, brought to light by Politico, underscores the growing complexities and inherent risks of integrating advanced AI tools into government operations.

### The Unfolding Revelation: A Breach of Protocol

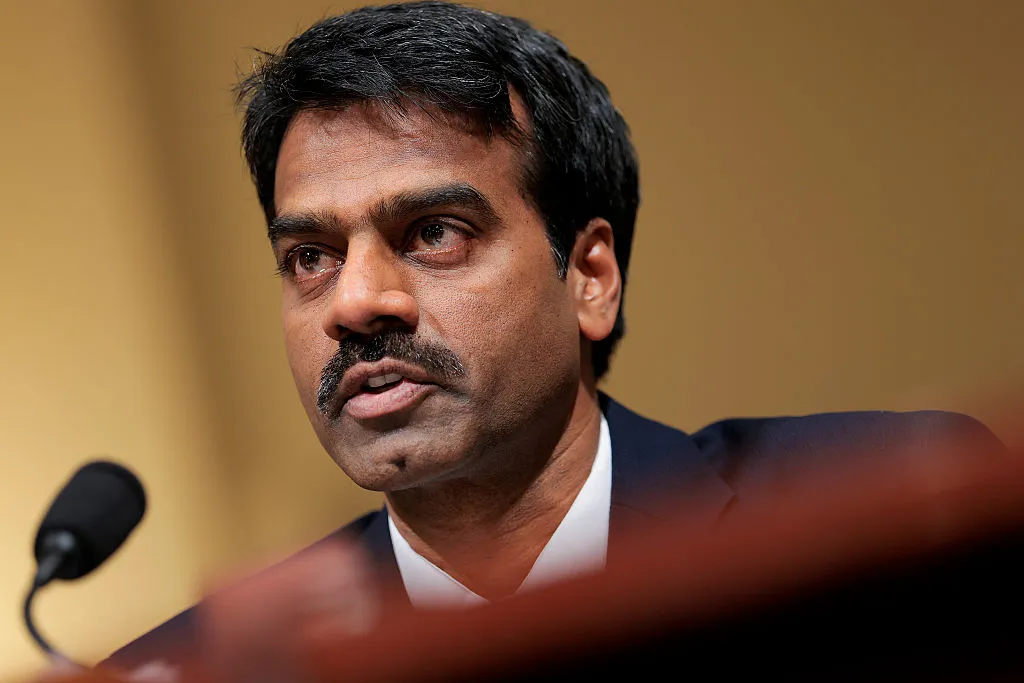

According to the reports, Madhu Gottumukkala, who serves as CISA’s acting director and was appointed during the Trump administration, triggered a cascade of automated security warnings with the uploads. Those warnings come from systems designed to prevent the unauthorized exfiltration or accidental exposure of government files from federal networks. That the agency’s top cybersecurity official set them off points to a serious lapse in judgment and in adherence to established protocols.

### The AI Conundrum: Why Public LLMs Are a Risk

At the heart of this controversy lies a critical concern: the interaction between internal government data and publicly accessible large language models (LLMs) like ChatGPT. Even documents that are unclassified but designated for internal use carry a degree of sensitivity. When such information is uploaded to a public LLM service, it leaves the agency’s control: it may be retained by the provider and, depending on the account’s data settings, used to train future models.

That creates a tangible risk: a model trained on such material could inadvertently learn, synthesize, and potentially reproduce fragments or insights derived from these sensitive files in response to future user queries. The result would compromise information that was never intended for public consumption, blurring the line between internal government operations and the open internet.

### Exceptions, Explanations, and Prior Scrutiny

Officials reportedly noted that Gottumukkala had been granted an exception to use ChatGPT earlier in his tenure, at a time when other CISA employees were prohibited from using it. This raises questions about the consistency of internal policies and the rationale behind such a high-level exemption. Following the security alerts, officials within the Department of Homeland Security (DHS), CISA’s parent agency, initiated an inquiry to assess any potential harm to national security.

A CISA spokesperson, responding to Politico’s queries, described Gottumukkala’s use of ChatGPT as “short-term and limited,” an explanation that some may find insufficient given the gravity of the data involved.

This incident also brings Gottumukkala’s past into sharper focus. Prior to his appointment at CISA, he served as South Dakota’s chief information officer under then-Governor Kristi Noem. More notably, following his transition to CISA, reports emerged that Gottumukkala failed a counterintelligence polygraph test. While Homeland Security later dismissed this specific event as “unsanctioned,” the episode was reportedly followed by the suspension of six career staff members from accessing classified information – a sequence of events that previously cast a shadow over internal security practices and leadership decisions within the agency.

### Looking Ahead: Navigating AI’s Ethical Minefield in Government

This episode is a reminder of the balance required as government agencies increasingly explore and adopt artificial intelligence technologies. While AI offers real potential for efficiency and innovation, its deployment must be governed by clear, enforceable policies that prioritize data security, privacy, and national interests. The line between technological advancement and critical infrastructure risk is a thin one, and for the nation’s premier cybersecurity agency, the stakes could not be higher. It’s a wake-up call for robust training, clearer guidelines, and unwavering accountability when it comes to safeguarding sensitive information in the age of AI.